AI Trading System

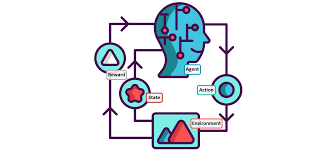

At XALT, we've developed a Deep Reinforcement Learning (DRL) agent tailored for stock trading. The prototype runs in an OpenAI Gym environment and observes both single-level and 10-level depth order book data, together with various technical indicators. We trained it with state-of-the-art DRL algorithms so that it learns the desired trading behavior under complex market conditions. Thorough backtesting validates its performance, which we see as a meaningful step forward for our algorithmic trading strategies.
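To make the setup above more concrete, here is a minimal sketch of what a Gym-style order book environment could look like. It is an illustration only, not XALT's implementation: the class name `ToyOrderBookEnv`, the synthetic random-walk price feed, the two indicators (a simple moving average of the mid price and the order book volume imbalance), the three-action space, and the equity-change reward are all assumptions. To stay dependency-free it mimics the classic Gym `reset`/`step` interface rather than subclassing `gym.Env`.

```python
import random


class ToyOrderBookEnv:
    """Hypothetical Gym-style environment over a synthetic limit order book."""

    HOLD, BUY, SELL = 0, 1, 2  # assumed discrete action space

    def __init__(self, depth=10, n_steps=200, tick=0.01, sma_window=5, seed=0):
        self.depth = depth            # 1 = single level, 10 = 10-level depth
        self.n_steps = n_steps        # episode length
        self.tick = tick              # price gap between adjacent levels
        self.sma_window = sma_window  # window of the moving-average indicator
        self.rng = random.Random(seed)

    def _make_book(self):
        # Random-walk the mid price, then lay out `depth` levels on each side.
        self.mid = max(1.0, self.mid + self.rng.gauss(0.0, 0.05))
        self.bids = [(self.mid - (i + 1) * self.tick, self.rng.randint(1, 100))
                     for i in range(self.depth)]
        self.asks = [(self.mid + (i + 1) * self.tick, self.rng.randint(1, 100))
                     for i in range(self.depth)]
        self.mids.append(self.mid)

    def _observe(self):
        # Indicators: moving average of the mid price and volume imbalance.
        window = self.mids[-self.sma_window:]
        sma = sum(window) / len(window)
        bid_vol = sum(size for _, size in self.bids)
        ask_vol = sum(size for _, size in self.asks)
        imbalance = (bid_vol - ask_vol) / (bid_vol + ask_vol)
        # Flatten (price, size) for every level, then append the extras.
        obs = []
        for price, size in self.bids + self.asks:
            obs.extend([price, float(size)])
        obs.extend([sma, imbalance, float(self.position)])
        return obs

    def _equity(self):
        # Mark the open position to the current mid price.
        return self.cash + self.position * self.mid

    def reset(self):
        self.t = 0
        self.mid = 100.0
        self.mids = []
        self.cash = 10_000.0
        self.position = 0
        self._make_book()
        return self._observe()

    def step(self, action):
        equity_before = self._equity()
        if action == self.BUY:
            self.cash -= self.asks[0][0]   # buy one share at the best ask
            self.position += 1
        elif action == self.SELL and self.position > 0:
            self.cash += self.bids[0][0]   # sell one share at the best bid
            self.position -= 1
        self._make_book()                  # advance the market one step
        self.t += 1
        reward = self._equity() - equity_before
        done = self.t >= self.n_steps
        return self._observe(), reward, done, {"equity": self._equity()}


def run_random_episode(env):
    """Roll out a random policy; a trained DRL agent would choose actions here."""
    env.reset()
    total, done = 0.0, False
    while not done:
        _, reward, done, _ = env.step(random.choice([0, 1, 2]))
        total += reward
    return total
```

In this sketch, `depth=1` and `depth=10` stand in for the single-level and 10-level views of the book, and each observation holds `4 * depth + 3` numbers. A backtest would replace the synthetic feed with recorded order book snapshots and the random policy with the trained agent.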
